Codespec

Optimizing an AI-powered user experience

Overview

Codespec is an online programming practice environment where learners of all levels can solve each problem five ways. For this project, I used informal interviewing, user-centered design thinking, AI-powered prototyping, and usability testing to transform a confusing content authoring flow into a guided collaboration between computer science instructors and GenAI.

Where: Codespec / 2025

My Role: As co-founder and solo designer, I iterated on a critical UX challenge and presented a recommendation to my co-founder.

Note: This is a functional prototype built with HTML, CSS, and JavaScript to demonstrate the user interface and flow.

Suboptimal GenAI Workflow

Codespec offers instructors a way to create problems with the help of generative AI, but instructors found the process confusing. Key issues included:

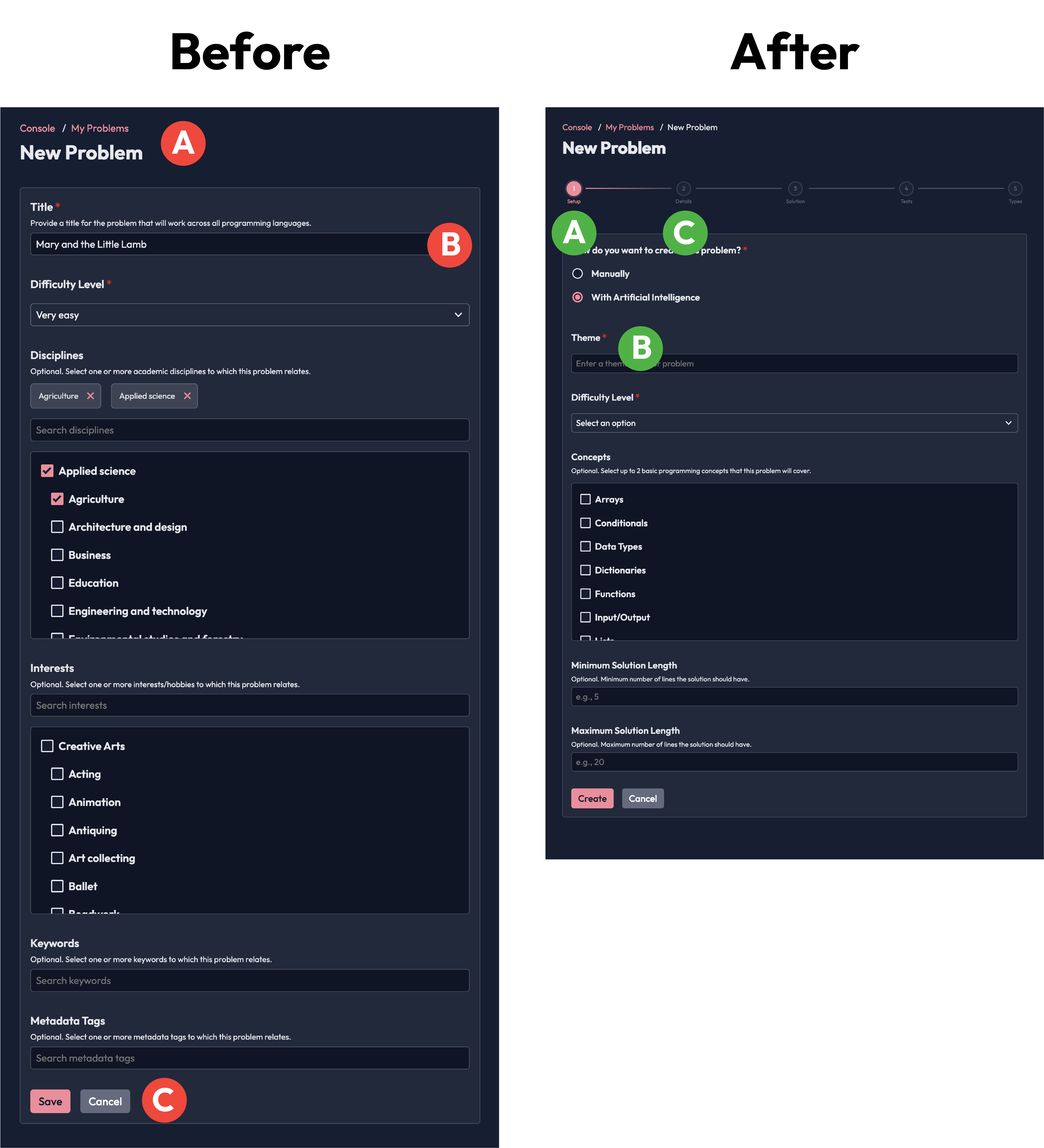

A. Lack of context in the problem creation flow: "What step am I on?"

B. Mismatch between the content the user was asked to provide and the content GenAI could generate: "Can't I just provide a theme and have GenAI create the title/instructions?"

C. Non-linear/unclear steps in the workflow: "Where do I go next?"

Collect V1 Feedback

To improve on the first version of the workflow, I had to understand its strengths and weaknesses, so I interviewed a researcher who had used it, asking non-leading, open-ended questions.

I also clicked through the workflow myself with the mindset of a user who was brand new to the platform.

Define Personas

Armed with a list of pain points, I drafted personas in which to ground my design.

Despite differences in programming knowledge, both user types are open to leveraging GenAI tools and would like to maximize output while minimizing time investment.

Delegate Responsibilities

The researcher I first interviewed was dissatisfied with the GenAI authoring experience. They wanted a more collaborative flow where they provided basic context (e.g., difficulty, theme, programming concepts) and GenAI took a pass at the rest.

I responded by breaking a problem down into its component parts and deciding which parts the author should specify and which GenAI should draft.
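One way to express this split in JavaScript might look like the sketch below. The field names come from the examples mentioned in this write-up (difficulty, theme, programming concepts, title, instructions); the `FIELD_OWNERS` map and the `buildPrompt` helper are illustrative assumptions, not Codespec's production code.

```javascript
// Hypothetical ownership map: which parts of a problem the author supplies
// and which parts GenAI drafts. Names are assumptions for illustration.
const FIELD_OWNERS = {
  difficulty: "author",
  theme: "author",
  concepts: "author",
  title: "genai",
  instructions: "genai",
};

// Return the names of all fields assigned to the given owner.
function fieldsOwnedBy(owner) {
  return Object.keys(FIELD_OWNERS).filter((field) => FIELD_OWNERS[field] === owner);
}

// Build a plain-text prompt from the author's context.
// Throws if any author-owned field is missing or empty.
function buildPrompt(context) {
  const missing = fieldsOwnedBy("author").filter(
    (field) => !context[field] || context[field].length === 0
  );
  if (missing.length > 0) {
    throw new Error(`Missing author-provided fields: ${missing.join(", ")}`);
  }
  const wanted = fieldsOwnedBy("genai").join(" and ");
  return (
    `Write the ${wanted} for a ${context.difficulty} programming practice problem ` +
    `themed around ${context.theme}, practicing: ${context.concepts.join(", ")}.`
  );
}
```

Keeping the ownership in one map means a field can be moved from the author to GenAI (or back) without touching the prompt-building logic.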

Map the Workflow

While the researcher wanted GenAI to take a first pass at writing the problem, they still wanted to retain control throughout the process to ensure pedagogical quality.

So I mapped a user journey that reflected a prompt-generate-review cycle, keeping the author in the driver's seat at all times.
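The prompt-generate-review cycle can be sketched as a small state machine. The state and action names below are hypothetical, chosen only to illustrate the idea that a draft cannot be accepted until the author has reviewed it.

```javascript
// Hypothetical states and actions for a prompt-generate-review cycle.
// Only the review state can lead to acceptance, so the author always signs off.
const TRANSITIONS = {
  prompt: { submit: "generate" },
  generate: { done: "review" },
  review: { accept: "accepted", revise: "prompt", regenerate: "generate" },
  accepted: {},
};

// Return the next state, or throw if the action is not allowed in this state.
function nextState(state, action) {
  const next = TRANSITIONS[state]?.[action];
  if (!next) {
    throw new Error(`Action "${action}" is not allowed in state "${state}"`);
  }
  return next;
}

// Apply a sequence of actions starting from the prompt state.
function runCycle(actions) {
  return actions.reduce(nextState, "prompt");
}
```

For example, `runCycle(["submit", "done", "revise", "submit", "done", "accept"])` walks through one revision before ending in the `accepted` state, while calling `nextState("generate", "accept")` throws because generated content has not yet been reviewed.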

Sketch Wireframes

With this flow in mind, I sketched out each screen. I imagined a linear wizard-like experience, where each step focused on a few key pieces about the problem.

I wanted the interface to feel approachable and clean, so authors could focus on the content rather than trying to understand how to use the system.
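A linear wizard of this kind needs very little state. The following is a minimal sketch, assuming three hypothetical step names; it shows how a single step index can answer both "What step am I on?" and "Where do I go next?".

```javascript
// Hypothetical step names; the real wizard's steps may differ.
const STEPS = ["Context", "Generate", "Review"];

// Create a linear wizard that tracks the current step by index.
function createWizard(steps) {
  let index = 0;

  // Human-readable progress label for a step indicator.
  const progress = () => `Step ${index + 1} of ${steps.length}: ${steps[index]}`;
  const canGoBack = () => index > 0;
  const canGoNext = () => index < steps.length - 1;

  // Move forward or backward one step, staying within bounds.
  const next = () => {
    if (canGoNext()) index += 1;
    return progress();
  };
  const back = () => {
    if (canGoBack()) index -= 1;
    return progress();
  };

  return { progress, canGoBack, canGoNext, next, back };
}
```

Because movement is limited to one step forward or back, the progress label and the enabled state of the Back and Next buttons can all be derived from the same index.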

Prototype with Claude Code

To bring the design to life, I collaborated with Claude in small, specific, example-rich steps.

I stated my end goal up front, but emphasized the importance of working incrementally:

"Using HTML, CSS, and JavaScript, I want to prototype a wizard flow for authoring programming practice problems with GenAI. The interface should look and feel like Codespec's production website (https://www.codespec.org). Let's create the prototype step by step.

Start by retrieving Codespec's stylesheet and JavaScript files, and saving them to a folder on my computer. Link to them from an index.html file in the same folder. You can create boilerplate HTML structure (head, body, main) but nothing else yet."

Validate the Redesign

Once the prototype was complete, I went back to the researcher I first interviewed and asked them to click through it. Along the way I prompted them to share their first impressions of each step.

Qualitative feedback indicated the redesign met the primary goals of:

A. more clearly showing instructors where they are in the overall creation flow

B. balancing efficiency and quality control in the GenAI-powered authoring process

C. more clearly guiding instructors to the appropriate next step

Better collaboration, faster authoring

Using informal interviewing, user-centered design thinking, AI-powered prototyping, and usability testing, I transformed a confusing content authoring flow into a guided collaboration experience between computer science instructors and GenAI.
